The mode in which we ask survey questions (for example, web or face-to-face) is important. It influences both who participates in the survey and how they answer. It is now common to combine multiple modes to maximise participation, but it is not clear how best to mix them. Is it always beneficial to add another mode of data collection? What is the best order for mixing modes?

In a recent paper published in the Journal of Survey Statistics and Methodology, Joe Sakshaug, Trivellore Raghunathan and I looked at exactly these two questions.

A study of young drivers in Michigan

The study data come from a longitudinal survey of young adults conducted by the University of Michigan Transportation Research Institute. The survey collected data from young adults about their driving behavior and attitudes. It experimentally randomized people to either a sequential telephone-mail survey or a sequential mail-telephone survey. Additionally, the survey had information about the respondents that was collected earlier in time or taken from administrative sources.

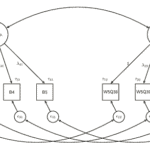

We first look at a question measuring the number of traffic accidents. Because this information was available both from the survey and from administrative records, we can look separately at measurement error, selection error and total error.

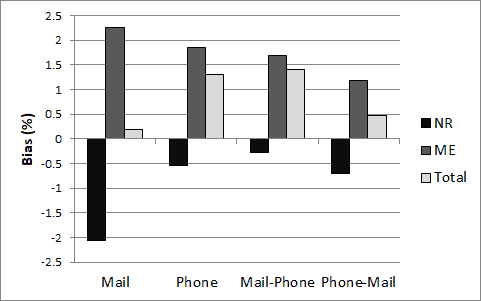
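To make the three quantities concrete, here is a minimal sketch of one common way to separate them when a record value exists for everyone in the sample. It assumes the standard decomposition in which total bias is the sum of nonresponse bias and measurement error bias; the function name, the variable names and the ten-person toy data are all hypothetical and are not taken from the study.

```python
from statistics import mean

def bias_decomposition(admin, survey, responded):
    """Return (nonresponse, measurement, total) bias in percentage points.

    admin     -- 0/1 value from administrative records for every sampled person
    survey    -- 0/1 value reported in the survey (None for nonrespondents)
    responded -- True if the person took part in the survey
    Assumes the record value is the "true" value (an assumption of this sketch).
    """
    admin_all = mean(admin)
    admin_resp = mean([a for a, r in zip(admin, responded) if r])
    survey_resp = mean([s for s, r in zip(survey, responded) if r])

    nonresponse = 100 * (admin_resp - admin_all)    # who takes part
    measurement = 100 * (survey_resp - admin_resp)  # how they answer
    total = 100 * (survey_resp - admin_all)         # combined effect
    return nonresponse, measurement, total

# Hypothetical toy sample of ten people (not study data).
admin = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
responded = [True, True, False, False, True, True, True, True, False, False]
survey = [1, 1, None, None, 1, 0, 0, 0, None, None]

nonresponse, measurement, total = bias_decomposition(admin, survey, responded)
print(f"nonresponse bias: {nonresponse:+.2f}")
print(f"measurement bias: {measurement:+.2f}")
print(f"total bias:       {total:+.2f}")
```

In this toy sample people with an accident on record are underrepresented among respondents (negative nonresponse bias) while respondents overreport (positive measurement error bias), so the two components partly offset each other in the total.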

Below we see that the two sources of bias have opposite effects in the starting modes. Individuals in the sample who have had a traffic accident are underrepresented in the respondent pool. Nevertheless, those who participated tended to overreport having had a traffic accident. When comparing the starting modes, nonresponse bias tends to be larger in the mail mode (-2.06 percent) than in the telephone mode (-0.54 percent). The same pattern holds for measurement error bias, which is slightly larger in the mail mode (2.25 percent) than in the telephone mode (1.85 percent).

We also see that the telephone-mail mixed mode has lower total bias for the traffic accident question than the mail-telephone sequence. This is true even if the second mode has lower nonresponse bias.

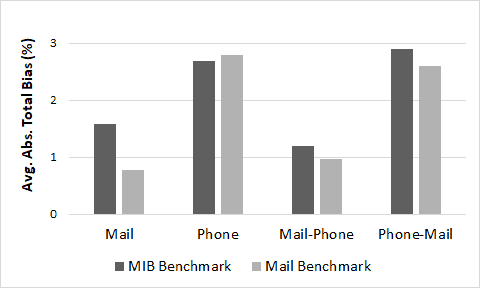

We also compared total bias for nine sensitive questions where we used either the mail answers or a “more is better” (MIB) assumption to look at measurement error. The last two columns of the figure show that the mail-telephone sequence yields smaller average absolute total bias than the telephone-mail sequence. This pattern is evident regardless of how the bias is calculated. In summary, the results suggest that the mail-telephone mode sequence does a better job of minimizing total bias compared to the telephone-mail sequence, on average.
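As a small illustration of why the comparison uses the average absolute bias rather than the plain average, the sketch below shows how signed biases across several questions can cancel out. The function name and the four bias values are hypothetical and chosen only for illustration; they are not results from the paper.

```python
def average_absolute_bias(biases):
    """Mean of the absolute values of a list of per-question biases."""
    return sum(abs(b) for b in biases) / len(biases)

# Hypothetical per-question total biases in percentage points (not study data).
hypothetical_biases = [1.5, -2.0, 0.5, -1.0]

# The signed mean lets positive and negative biases offset each other.
signed_mean = sum(hypothetical_biases) / len(hypothetical_biases)

# The absolute mean keeps the size of each bias regardless of direction.
avg_abs = average_absolute_bias(hypothetical_biases)

print(f"signed mean bias:      {signed_mean:+.2f}")
print(f"average absolute bias: {avg_abs:.2f}")
```

With these made-up numbers the signed mean looks small even though every individual question is off by at least half a percentage point, which is why the absolute version is the more informative summary across questions.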

So how should you mix modes?

We found that starting with the telephone mode minimized nonresponse bias, but that starting with mail minimized measurement error bias to a greater extent. So, when planning future surveys, we might need to consider which type of error we most want to avoid and choose the first mode accordingly.

Secondly, we found consistent patterns suggesting that following up mail nonrespondents with telephone reduced both nonresponse and measurement error bias across a range of demographic and substantive variables. However, the reverse sequence – following up telephone nonrespondents by mail – yielded a less consistent pattern of bias reduction.

So while there is a common conception that adding a mode of data collection leads to better data quality overall, that is not always the case.

We also showed that the mail-telephone mode sequence minimized total bias to a greater extent than the telephone-mail sequence, on average. This finding is particularly noteworthy given that both mixed-mode sequences yielded very similar response rates.

So it appears that the order in which modes are switched can influence the quality of the data we collect. We find evidence that starting with a self-administered interview mode and switching to an interviewer-administered mode to follow up with nonrespondents leads to better data quality overall.

While this sequence is typically preferred from a cost and response rate perspective, the finding that it can also minimize both nonresponse and measurement error bias is a reassuring one for survey practice.

You can read more details about the study in our Journal of Survey Statistics and Methodology article. The paper is available under open access here.